Crossing Reality: Comparing Physical and Virtual Robot Deixis

Zhao Han*, Yifei Zhu*, Albert Phan, Fernando Sandoval Garza, Amia Castro and Tom Williams

*Equal contribution (co-first authors)

ACM/IEEE International Conference on Human-Robot Interaction (HRI, 2023)

Virtual arms – what are they really good at?

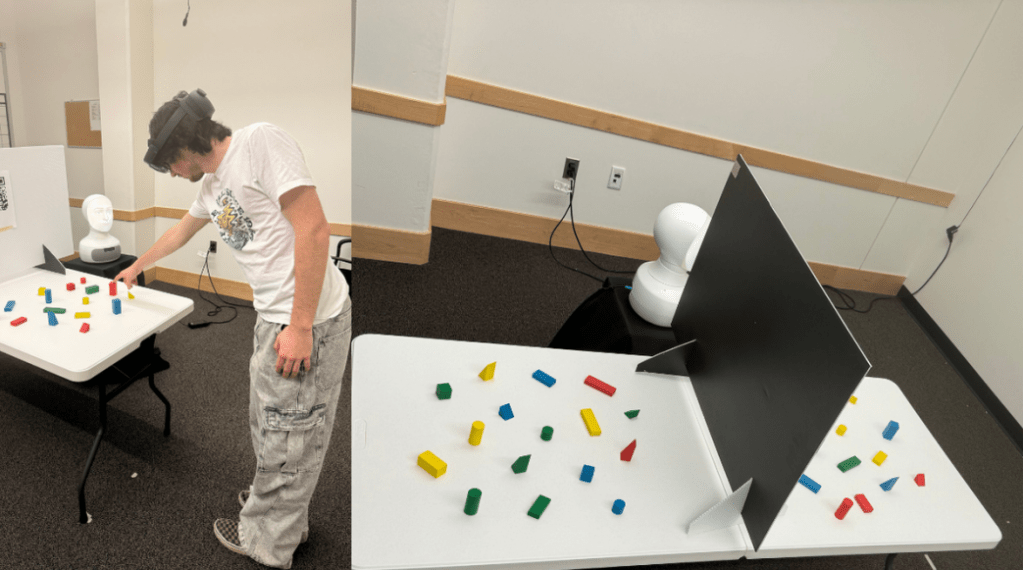

In this work, we present empirical evidence comparing the use of virtual (AR) and physical arms to perform deictic gestures that identify virtual or physical referents. Our subjective and objective results demonstrate that mixed reality deictic gestures succeed in overcoming the potential limitations of virtual (non-physical) robot arms, and that they can be used successfully regardless of differences in physicality between gesture and referent.

Video presentation available here

Robots for Social Justice (R4SJ): Toward a More Equitable Practice of Human-Robot Interaction

Yifei Zhu, Ruchen Wen and Tom Williams

ACM/IEEE International Conference on Human-Robot Interaction (HRI, 2024)

How can we (HRI researchers and roboticists) truly pursue social justice?

In this work, we present Robots for Social Justice (R4SJ): a framework for an equitable engineering practice of Human-Robot Interaction, grounded in the Engineering for Social Justice (E4SJ) framework for Engineering Education and intended to complement existing frameworks for guiding equitable HRI research. To understand the new insights this framework could provide to the field of HRI, we analyze the past decade of papers published at the ACM/IEEE International Conference on Human-Robot Interaction. Based on the gaps identified through this analysis, we make five concrete recommendations and highlight key questions that can guide introspection by engineers, designers, and researchers.

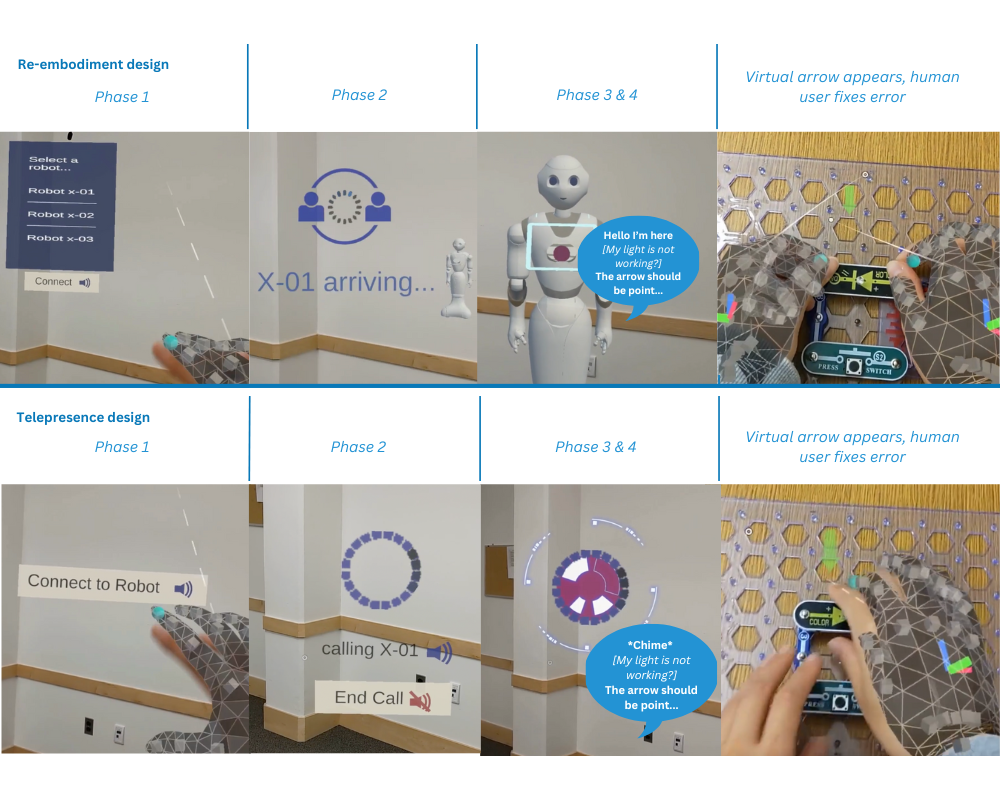

Designing Augmented Reality Robot Guidance Interactions through the Metaphors of Re-embodiment and Telepresence

Yifei Zhu, Colin Brush and Tom Williams

IEEE International Conference on Robot and Human Interactive Communication (RO-MAN, 2024)

We text, call, and video chat with friends and family when they are away; how can we enable the same for remotely located robots?

Robots deployed into real-world task-based environments may need to provide assistance, troubleshooting, and on-the-fly instruction for human users. While previous work has considered how robots can provide this assistance while co-located with human teammates, it is unclear how robots might best support users once they are no longer co-located.

We propose the use of Augmented Reality as a medium for conveying long-distance task guidance from humans’ existing robot teammates, through Augmented Reality facilitated Robotic Guidance (ARRoG). Moreover, because there are multiple ways that a robot might project its identity through an Augmented Reality Head Mounted Display, we identify two candidate designs inspired by existing interaction patterns in the human-robot interaction (HRI) literature (re-embodiment-based and telepresence-based identity projection designs), present the results of a design workshop to explore how these designs might be most effectively implemented, and the results of a human-subject study intended to validate these designs.

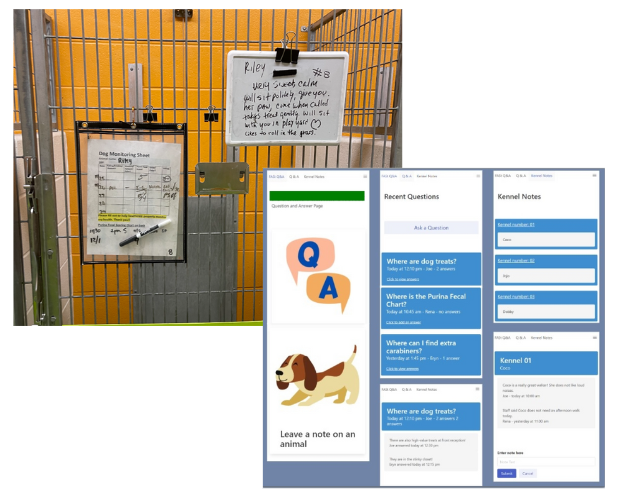

FETCH: Fostering and Enhancing Teamwork, Communication, and Healthy Community Among Animal Shelter Volunteers through Mobile Technology

Yifei Zhu, Joe Peak, Eryn Kelsey Adkins and C. Estelle Smith

ACM Conference on Human Factors in Computing Systems, Late Breaking Work (CHI LBW, 2024)

What are the needs and priorities of animal shelter staff and volunteers concerning assistive technology?

Although prior literature has explored technologies for generally supporting volunteer work, no studies have accounted for the specific, contextually situated technology needs of animal shelters and the volunteers who keep them running. In this work, we: 1) conduct formative work (need-finding surveys and interviews) with a local animal shelter; 2) use an iterative human-centered design process to build a mobile app called FETCH tailored to this community’s priorities; and 3) conduct user testing sessions to assess FETCH. We found that during shifts at the shelter, volunteers face challenges with communication and information management. We designed FETCH to help dog walkers manage information between shifts and to support community development. Users found FETCH practical, effective, and accessible. Moreover, the results of this case study can help inform future projects that aim to develop technology for animal shelters and rescues, which perform vital services for society and animal wellbeing.

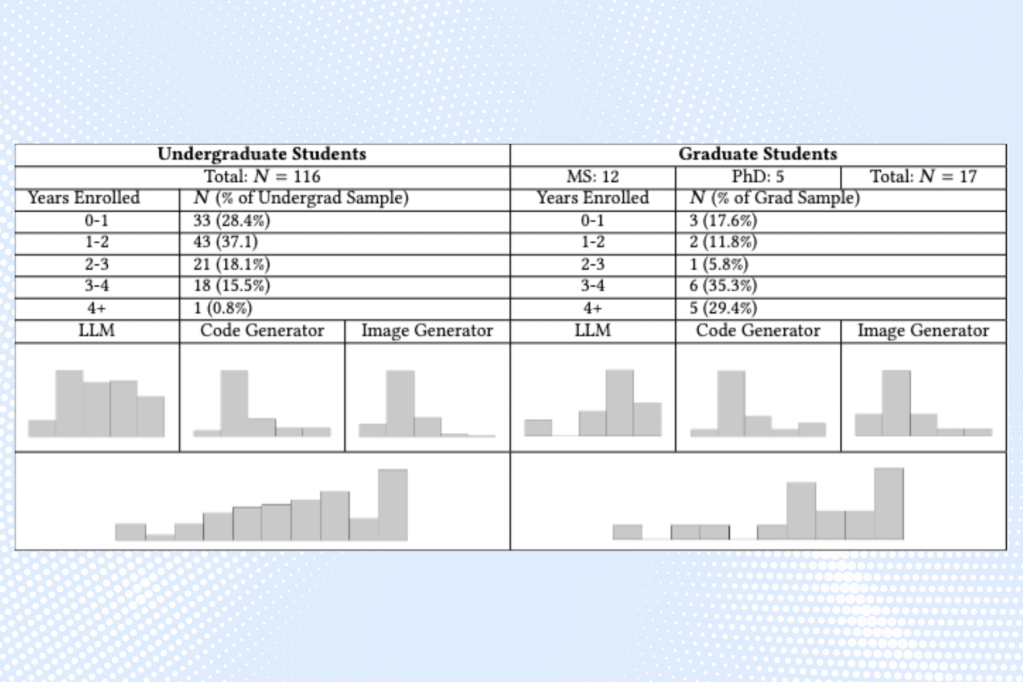

Early Adoption of Generative Artificial Intelligence in Computing Education: Emergent Student Use Cases and Perspectives in 2023

C. Estelle Smith, Kylee Shiekh, Hayden Cooreman, Sharfi Rahman, Yifei Zhu, et al.

Innovation and Technology in Computer Science Education V. 1 (ITiCSE 2024)

As GenAI tools become widely available, how are students actually using them? How should curriculum and policy adapt to this change?

With the rapid development and increasing public availability of Generative Artificial Intelligence (GenAI) models and tools, educational institutions and educators must immediately reckon with the impact of students using GenAI. There is limited prior research on computing students’ use and perceptions of GenAI. In anticipation of future advances and evolutions of GenAI, we capture a snapshot of student attitudes towards, and uses of, still-emerging GenAI in a period before university policies had responded to these technologies.

We surveyed all computer science majors at a small, engineering-focused R1 university in order to: (1) capture a baseline assessment of how GenAI has been immediately adopted by aspiring computer scientists; (2) describe computing students’ GenAI-related needs and concerns for their education and careers; and (3) discuss GenAI influences on CS pedagogy, curriculum, culture, and policy. We present an exploratory qualitative analysis of this data and its implications.

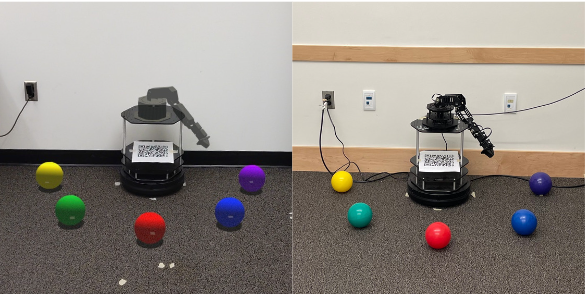

That’s Iconic! Designing Augmented Reality Iconic Gestures To Enhance Multi-modal Communication For Morphologically Limited Robots

Yifei Zhu, Alexander Torres, Zane Aloia and Tom Williams

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2025)

How can we use AR to enable alternative gestures for robots?

Robots that use gestures in conjunction with speech can achieve more effective and natural communication with human teammates; however, not all robots have capable and dexterous arms. Augmented Reality technology has effectively enabled deictic gestures for morphologically limited robots in prior work, but the design space of AR-facilitated iconic gestures remains under-explored. Moreover, existing work largely focuses on closed-world contexts, where all referents are known a priori. In this work, we present a human-subject study situated in an open-world context, and compare the task performance and subjective perception associated with three different iconic gesture designs (anthropomorphic, non-anthropomorphic, and deictic-iconic) against a previously studied abstract gesture design. Our quantitative and qualitative results demonstrate that deictic-iconic gestures (in which a robot hand is shown pointing to a visualization of a target referent) outperform all other gestures on all metrics, but that non-anthropomorphic iconic gestures (in which a visualization of a target referent appears on its own) are overall most preferred by users. These results represent a significant step toward enabling effective human-robot interactions in realistic, large-scale, open-world environments.